SYNOPSYS (SNPS) vs. Dassault Systèmes (DSY): AI unlocks the Impossible Materials Theory

How Enterprise SaaS is reloading for Agentic AI

0. Preface

Lately, I’ve been fascinated by the work of Prof. Markus J. Buehler. He works on something called “Impossible Materials.” Basically, he uses AI to design structures that shouldn’t exist in nature—things that are incredibly light but stronger than steel.

Now, I’m not a scientist. I don’t know much about chemicals or atoms. But I know one or two things about AI and Data Management. And what I see in his work is a beautiful metaphor for the stock market today. But it’s not as easy as one might think. You can’t just PROMPT the AI to discover new materials. In its raw form, AI can’t invent new things. And then there is hallucination. In this deep dive, we will talk about how to use modern tools effectively.

Prof. Buehler shows us that it’s not just about what something is made of, but how it is put together. If the structure is smart, it can survive anything.

Right now, everyone is talking about “SaaSmageddon.” SaaS stocks have crashed and many investors are running away in fear. They see a glass that is half empty. Or even less.

I see the opposite. I see a glass that is half full. Or more. Because of this crash, the “Impossible Materials” of the software world are finally on sale. We are in a rare moment where we can buy the strongest, most essential companies for a bargain. We just have to be smart enough to pick the ones with the right “structure.”

SaaSmageddon isn’t the end. It’s the best entry point we’ve had in years for many stocks. Let’s go find the right ones.

Please note that there is also a podcast version of this article.

Part I: The New Industrial Paradigm

1.1 Introduction: The Shifts of January 2026

By late January 2026, the global industrial software market has split into two distinct camps. This divide isn’t just about market value; it’s about two completely different philosophies on how to digitize physical objects. The completion of Synopsys’s (ticker NASDAQ:SNPS) acquisition of Ansys in mid-2025 marks the formal start of the “Silicon to Systems” strategy, creating a single entity capable of simulating the physics of the microchip and of the macro-system with equal weight. Synopsys plans to unveil its first joint Synopsys-Ansys tools at Synopsys Converge in March 2026. At the same time, Dassault Systèmes (ticker DSY.PA) has staked out a defensive but visionary counter-position built around the “Generative Economy,” launching its “3D UNIV+RSES” strategy to address headwinds in the life sciences sector and to capitalize on the sovereignty-critical European market.

I regularly write on X about Synopsys and the Ansys merger, and I have been following Dassault Systèmes closely for years. I am confident that now is the time to dig even deeper.

This report is a comprehensive analysis of this new reality. It analyzes the three forces currently reshaping the engineering world: the operationalization of Agentic AI in materials science, which has transitioned from academic theory to industrial productivity; the onset of “SaaSmageddon,” a radical restructuring of software monetization from static seats to dynamic consumption tokens; and the sharply contrasting financial trajectories of the two heavyweights as they navigate the fiscal realities of 2026.

The catalysts are already there. They may be hidden, buried in financial reports, but that’s the advantage we have over the “Stock Screener Guys.” We just need to find them.

The analysis draws heavily on the pioneering theoretical work of Professor Markus J. Buehler at MIT, whose 2024–2025 research into agentic graph reasoning provides the intellectual bedrock for the next generation of industrial software. It places this academic work side by side with the commercial realities of Synopsys’s debt-laden integration of Ansys and Dassault’s methodical migration of its massive user base to the cloud.

Buehler is not just another scientist; he is highly decorated. His work is the foundation of this Deep Dive. Please take some time to read the link I provided above.

Buehler argues:

For most of history, discovering new materials was largely an Edisonian process – researchers mixed ingredients and tested results in a slow loop of trial and error, often stumbling on advances by chance. In fact, serendipity and “cook-and-look” experimentation were the norm that developing a novel material (say, a new alloy or polymer) could take decades of work and a fair bit of luck. A common adage was that it takes 10-20 years or more for a material to go from initial discovery to commercial use.

We now stand in an era where the integration of AI, simulation, and manufacturing allows us to design materials with a confidence and speed that was unthinkable a generation ago. The field’s history shows a pattern of surprise moments – from realizing simulations could replace massive tests, to seeing an alloy go from computer to cockpit in six years, to witnessing an AI invent a material structure that a printer immediately brings to life. Each of these milestones has built towards the present, where atoms-to-product design is not science fiction but daily practice in leading labs and companies.

The impact is profound: innovation cycles are shorter, the material possibilities are broader, and the economic gains are significant for those who embrace the approach. Perhaps more excitingly, it unlocks creativity. Engineers and scientists are no longer constrained to what they can directly prototype or intuit; they can explore “virtual worlds” of materials, guided by algorithms, and then manifest the best ideas in reality. This synergy of human creativity and artificial intelligence, grounded in solid physics and enabled by cutting-edge manufacturing, is ushering in a new era of accelerated innovation in materials design.

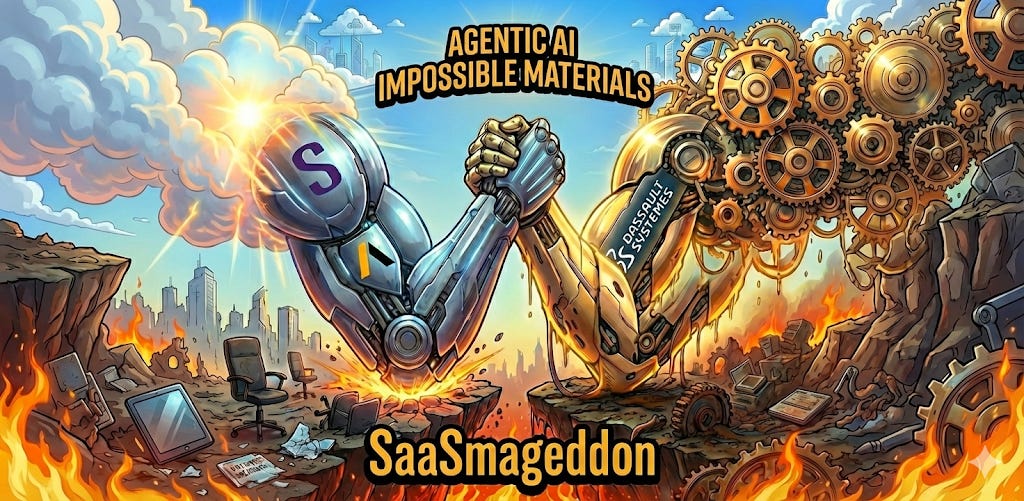

1.2 The Macro-Strategic Context

The industrial software market in 2026 is no longer about “Computer-Aided Design” (CAD) or “Computer-Aided Engineering” (CAE). Those terms belong to the previous decade. The current battleground is the “Virtual Twin Experience” (Dassault’s nomenclature) versus “Silicon to Systems Design” (Synopsys’s nomenclature). Both concepts rely on a shared premise: the physical world is becoming a subset of the digital world.

1.2.1 Synopsys: The Vertical Leader

Synopsys has executed a strategy of vertical integration. By acquiring Ansys for approximately $35 billion, it has secured the “physics ground truth” for the electronics industry. This acquisition had been discussed in tech forums for a long time as a potentially perfect match and a logical next step. Modern high-performance computing, autonomous vehicles, and 5G/6G infrastructure require chips that are not just logically correct (the traditional domain of Synopsys EDA) but physically robust against thermal, mechanical, and electromagnetic stress (the domain of Ansys). The merger validates the thesis that one cannot design a 3nm chiplet-based system without simultaneously simulating the warp of the packaging substrate and the airflow of the datacenter cooling system.

1.2.2 Dassault Systèmes: The Horizontal Visionary

Conversely, Dassault Systèmes pursues horizontal ubiquity. Its “3D UNIV+RSES” strategy, unveiled in early 2025, positions the 3DEXPERIENCE platform not as an engineering tool, but as the operating system for the “Generative Economy”. This vision encompasses everything from the molecular design of new therapeutics (via BIOVIA) to the urban planning of smart cities (via GEOVIA) and the manufacturing of consumer goods. Dassault’s bet is on Sovereignty and Knowledge. By owning the infrastructure (OUTSCALE) and the data models (IP Lifecycle Management), Dassault aims to be the trusted repository for the world’s intellectual property in an era of AI insecurity.

1.3 Agentic AI meets integration: The next frontier

OK, let’s finalise Part I with an article I stumbled upon during my morning routine. These days I have a lot of management tasks, but to stay technically up to date I regularly read thenewstack.io, which I highly recommend. The article “Agentic AI meets integration: The next frontier” immediately caught my attention, given the SaaSmageddon we are currently experiencing on the stock market. It explores the convergence of Agentic AI (artificial intelligence capable of autonomous reasoning, decision-making and action) with the field of system integration. The author argues that as software architectures (like microservices and distributed systems) become increasingly complex, traditional manual integration methods are no longer effective. Agentic AI represents the next evolutionary step, moving beyond passive automation to active, autonomous management of data and workflows. The article’s core thesis can be summarised in four statements:

The Shift to Autonomy: Unlike traditional AI (which often just predicts or generates content), Agentic AI can independently navigate systems, make decisions and execute tasks with minimal human intervention.

Integration as the Critical Backbone: For AI agents to function effectively, they require a robust integration strategy. They need seamless access to data and APIs across the entire enterprise to “see,” “think,” and “act.” Without this connectivity, agents are isolated and ineffective.

Dynamic Adaptation: Agentic AI can handle the “frequent changes” inherent in modern DevOps and distributed architectures. Instead of hard-coded connections, these agents can adapt to shifting environments, helping teams navigate complexity with greater agility.

The “Human-in-the-Loop” Balance: While the goal is autonomy, the article points out that humans must remain involved to set boundaries, ensuring governance and trust, a concept we used to call “governed agency.”
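To make “governed agency” a little more concrete, here is a minimal Python sketch, assuming a hypothetical setup in which every action an agent wants to take is described by a tool name and a risk score, and a human-defined policy decides which actions may run autonomously and which need a human sign-off. The names `Action`, `Policy`, and `govern` are illustrative only; they are not taken from the article or from any real agent framework.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Action:
    tool: str                                    # hypothetical tool/API the agent wants to call
    risk: float                                  # hypothetical risk score in [0, 1]
    payload: dict = field(default_factory=dict)  # arguments for the call (unused here)


@dataclass
class Policy:
    allowed_tools: set          # integration boundary: what the agent can "see" and "act" on
    auto_approve_below: float   # human-defined risk threshold for autonomous execution


def govern(action: Action, policy: Policy,
           human_approves: Callable[[Action], bool]) -> str:
    """Return a decision label for an agent action; it does not call the tool."""
    if action.tool not in policy.allowed_tools:
        return "rejected: tool not integrated"
    if action.risk < policy.auto_approve_below:
        return "run autonomously"
    # Above the threshold, a human stays in the loop.
    if human_approves(action):
        return "run after human approval"
    return "rejected by human"


def deny_all(action: Action) -> bool:
    return False


policy = Policy({"read_bom", "run_simulation", "order_parts"}, 0.5)
print(govern(Action("run_simulation", 0.2), policy, deny_all))
print(govern(Action("order_parts", 0.9), policy, deny_all))
print(govern(Action("delete_database", 0.1), policy, deny_all))
```

The two checks map onto two of the statements above: an agent without integration cannot act at all, and autonomy is bounded by thresholds that humans set.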

The author argues that organizations cannot simply layer Agentic AI on top of existing fragmented systems; they must fundamentally treat integration as the enabler of autonomy. This is no easy task and needs to be planned and executed very well. It takes more than immediately cancelling all SaaS subscriptions and letting agents do the work. The future lies in systems where integration is not just a utility, but the intelligent fabric that allows AI agents to scalably drive business outcomes.

This article fully supports Prof. Buehler’s thesis, which we will discuss in the following chapters.

Part II: The Agentic AI Thesis in Materials Science

2.1 Beyond Generative: The Rise of the Scientific Agent

The transition from “Generative AI” to “Agentic AI” represents the single most significant technological inflection point for materials science in the last half-century, according to Buehler’s work. While generative models (like GPT-4) can synthesize text or images based on statistical patterns, they lack an internal model of physical reality. They hallucinate. In software and engineering, a hallucinated material property is not just an error; it is a liability. Closing that gap is the goal of Physics-Informed AI.

Agentic AI solves this by embedding the language model within a system of tools, memory, and reasoning loops. These agents do not just “guess” an answer; they formulate a hypothesis, plan a simulation to test it, execute the simulation, analyze the results, and refine the hypothesis—all autonomously.

2.2 The Theoretical Framework: Prof Markus Buehler’s Graph Reasoning

The theoretical framework for this shift has been established by Professor Markus J. Buehler at MIT. His work throughout 2024 and 2025 demonstrates that the future of discovery lies not just in bigger models, but in structured reasoning.

2.2.1 Self-Organizing Knowledge Networks

In his 2025 paper, “Agentic Deep Graph Reasoning Yields Self-Organizing Knowledge Networks,” Buehler challenges the reliance on static databases. Traditional materials informatics relies on fixed datasets (like the Materials Project). Buehler’s agents, however, construct dynamic knowledge graphs to reveal hidden patterns across different domains:

Mechanism: The agent uses a large language model to parse scientific literature or simulation data. Instead of storing this as text, it extracts entities (e.g., “Graphene,” “Young’s Modulus”) and relationships (e.g., “increases,” “correlates with”) to build a graph.

Self-Organization: Crucially, the graph is not static. As the agent encounters new data—perhaps contradicting earlier findings—it rewires the graph. This “self-organizing” capability allows the AI to maintain a coherent, up-to-date internal model of physics that evolves with discovery.

Graph-PRefLexOR: This architecture is powered by “Graph-PRefLexOR,” a specialized 3-billion-parameter model designed to reason over these graphs. Unlike standard LLMs which struggle with multi-hop causal chains, Graph-PRefLexOR can traverse the graph to find non-obvious connections, effectively “reasoning” about material behavior.

Source: https://www.researchgate.net/scientific-contributions/Alireza-Ghafarollahi-2258659058
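The mechanism described above (extract entity-relationship triples, rewire when new data contradicts old findings, traverse multi-hop chains) can be illustrated with a toy Python sketch. This is not Buehler’s code and not Graph-PRefLexOR; it is a plain dictionary-based graph searched breadth-first, and the triples are hypothetical examples rather than results extracted from real papers.

```python
from collections import deque


class KnowledgeGraph:
    """Toy directed knowledge graph: (subject) -[relation]-> (object)."""

    def __init__(self):
        self.edges = {}  # subject -> {object: relation}

    def add(self, subj, rel, obj):
        # Re-adding an existing edge with a new relation overwrites it:
        # a crude stand-in for "rewiring" the graph on contradicting evidence.
        self.edges.setdefault(subj, {})[obj] = rel
        self.edges.setdefault(obj, {})

    def path(self, start, goal):
        """Breadth-first search for the shortest multi-hop causal chain."""
        queue = deque([[start]])
        seen = {start}
        while queue:
            nodes = queue.popleft()
            if nodes[-1] == goal:
                return [(a, self.edges[a][b], b) for a, b in zip(nodes, nodes[1:])]
            for nxt in self.edges.get(nodes[-1], {}):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nodes + [nxt])
        return None


kg = KnowledgeGraph()
# Hypothetical triples an LLM might extract from papers.
kg.add("graphene content", "increases", "Young's modulus")
kg.add("Young's modulus", "correlates with", "stiffness")
kg.add("stiffness", "reduces", "deflection")
print(kg.path("graphene content", "deflection"))
# Hypothetical new data contradicts the first finding: rewire that edge.
kg.add("graphene content", "decreases", "Young's modulus")
print(kg.path("graphene content", "deflection"))
```

The three-hop chain from “graphene content” to “deflection” is never stated as a single fact; it only emerges by traversing the graph, which is the kind of non-obvious connection the section refers to.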

2.2.2 The “Teacherless” Paradigm

A profound implication of Buehler’s work is the concept of “teacherless” learning. In traditional machine learning, models are trained by humans (supervision). In the agentic framework, the “teacher” is the physical law itself.

Hypergraph Topology: The system uses the topology of the knowledge hypergraph as a “verifiable guardrail.” If an agent generates a hypothesis that violates the topological constraints of the graph (which encode physical laws), the hypothesis is rejected before resources are wasted on simulation. This internal consistency check allows the agent to learn without human supervision, accelerating discovery in “teacherless” environments.
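A minimal sketch of the “reject before simulating” idea, assuming constraints can be written as simple predicates over a hypothesis. In Buehler’s work the guardrail comes from the topology of the knowledge hypergraph; here it is deliberately simplified to hand-written rules, and the `Hypothesis` fields, constraint names, and numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    composition: dict         # element -> atomic fraction (hypothetical)
    predicted_density: float  # g/cm^3 (hypothetical)


# Hypothetical constraints standing in for physical laws encoded in the graph.
CONSTRAINTS = {
    "fractions sum to 1": lambda h: abs(sum(h.composition.values()) - 1.0) < 1e-6,
    "no negative fractions": lambda h: all(v >= 0 for v in h.composition.values()),
    "positive density": lambda h: h.predicted_density > 0,
}


def screen(hypotheses):
    """Split hypotheses into ones worth simulating and rejected ones (with reasons)."""
    accepted, rejected = [], []
    for h in hypotheses:
        violated = [name for name, check in CONSTRAINTS.items() if not check(h)]
        if violated:
            rejected.append((h, violated))
        else:
            accepted.append(h)
    return accepted, rejected


candidates = [
    Hypothesis({"Nb": 0.4, "Mo": 0.3, "Ta": 0.3}, 11.2),
    Hypothesis({"Nb": 0.5, "Mo": 0.6}, 9.0),   # fractions sum to 1.1
    Hypothesis({"Nb": 1.2, "Mo": -0.2}, 8.5),  # negative fraction
]
ok, bad = screen(candidates)
print(len(ok), [reasons for _, reasons in bad])
```

Only the first candidate would be passed on to a (costly) simulation; the other two are discarded without spending any compute.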

2.3 Applied Agentic Science: From Alloys to Proteins

The theoretical advantages of graph reasoning have been translated into concrete results in two primary domains: metallurgy and biology.

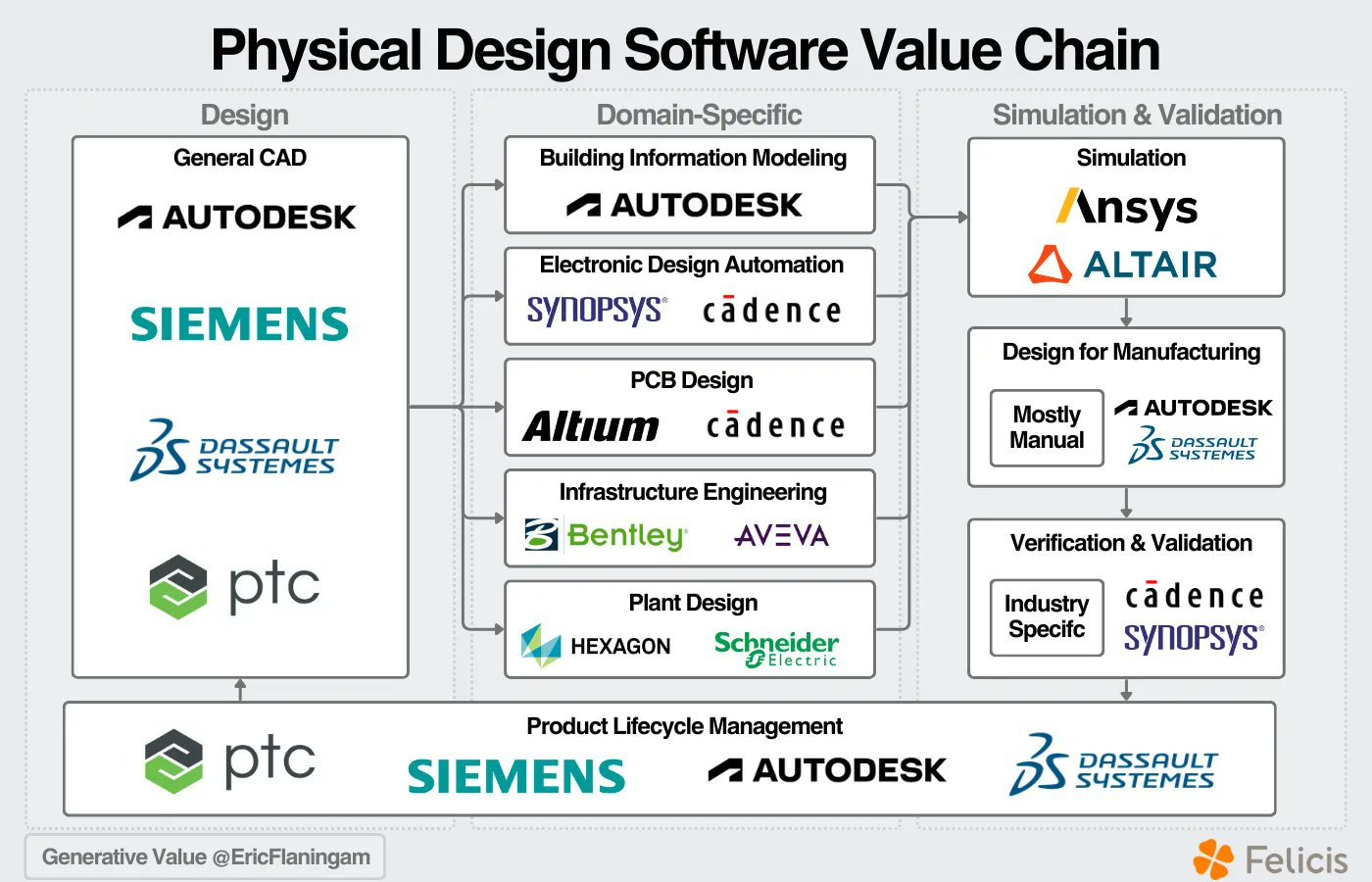

2.3.1 Autonomous Alloy Discovery (The MPEA Challenge)

Multi-Principal Element Alloys (MPEAs) offer vast design spaces that are impossible to explore via brute force. In collaboration with Alireza Ghafarollahi, Buehler developed a multi-agent system to navigate this space.

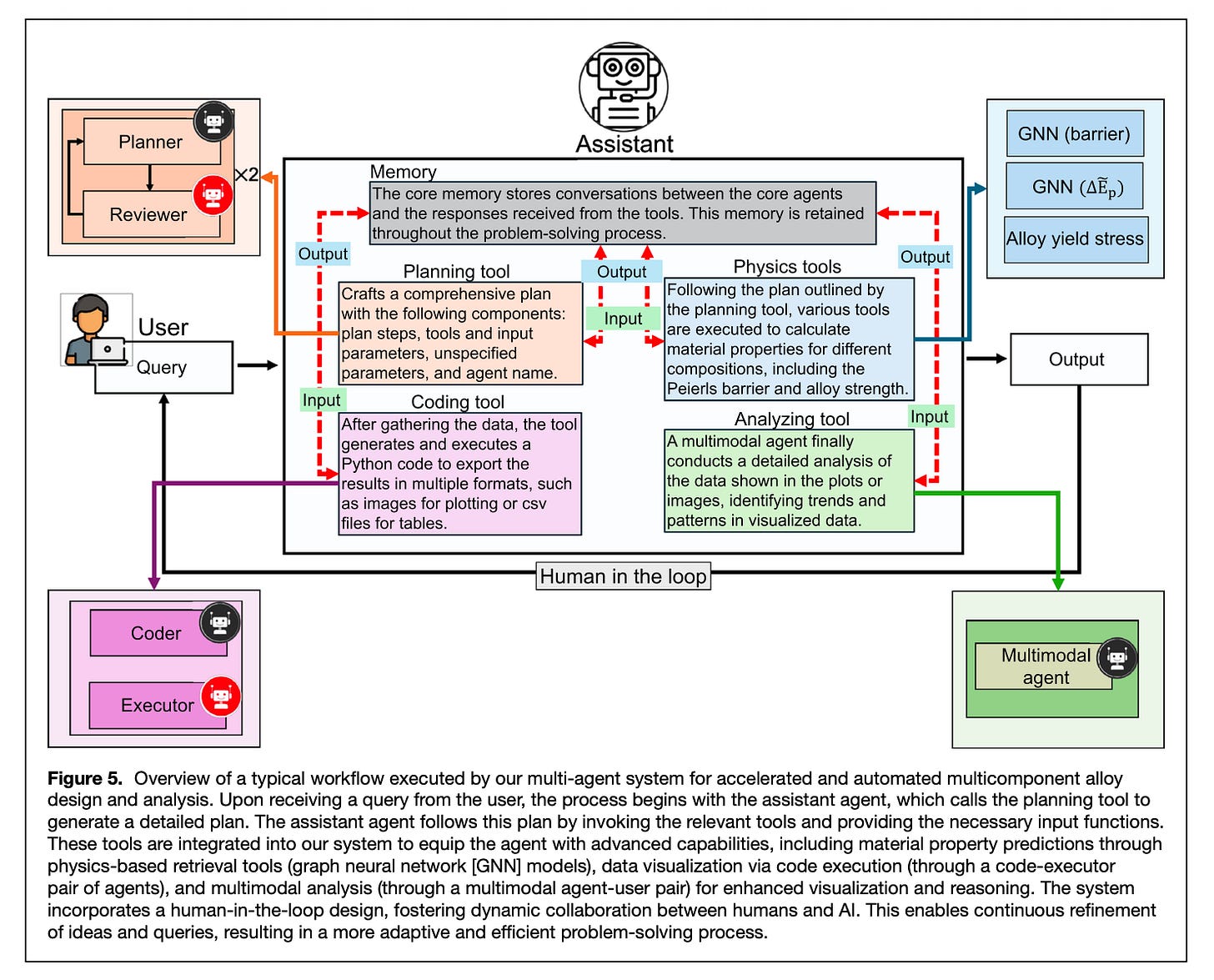

The Architecture: The system employs a “swarm” of specialized agents.

The Planner: An LLM-based agent that defines the search strategy (e.g., “Focus on Nb-Mo-Ta alloys for high-temperature stability”).

The Simulator: A specialized agent that executes Graph Neural Network (GNN) predictions.

The Analyst: An agent that interprets the results and feeds them back to the Planner.

The Breakthrough: The team developed a GNN capable of predicting the Peierls barrier—the energy required to move a dislocation through a crystal lattice—orders of magnitude faster than Density Functional Theory (DFT). By coupling this fast GNN with the reasoning agent, the system could screen millions of compositions, identifying alloys with optimal yield stress and ductility without human guidance.

Source: https://www.researchgate.net/scientific-contributions/Alireza-Ghafarollahi-2258659058

All data and code are available on GitHub at https://github.com/lamm-mit/AlloyAgents if you want to dig even deeper.
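The Planner/Simulator/Analyst division of labour can be sketched as a simple loop. This is an illustrative Python sketch, not the AlloyAgents code: the “Simulator” is a made-up analytic function standing in for the GNN surrogate, and the score it returns is a hypothetical objective, not a real Peierls barrier or yield stress. What it shows is the control flow: because the surrogate is cheap, each iteration can screen hundreds of compositions.

```python
import random


def planner(best, step, rng, n=200):
    """Propose ternary Nb-Mo-Ta compositions near the current best (normalized)."""
    proposals = []
    for _ in range(n):
        raw = [max(1e-3, x + rng.uniform(-step, step)) for x in best]
        total = sum(raw)
        proposals.append(tuple(x / total for x in raw))
    return proposals


def simulator(comp):
    """Hypothetical surrogate score (stand-in for a fast GNN prediction)."""
    nb, mo, ta = comp
    return -((nb - 0.2) ** 2 + (mo - 0.5) ** 2 + (ta - 0.3) ** 2)


def analyst(scored, best, best_score, step):
    """Keep the best candidate; halve the search step if nothing improved."""
    comp, score = max(scored, key=lambda cs: cs[1])
    if score > best_score:
        return comp, score, step
    return best, best_score, step * 0.5


rng = random.Random(0)
best = (1 / 3, 1 / 3, 1 / 3)
best_score, step = simulator(best), 0.2
screened = 0
for _ in range(20):
    scored = [(c, simulator(c)) for c in planner(best, step, rng)]
    screened += len(scored)
    best, best_score, step = analyst(scored, best, best_score, step)
print(screened, [round(x, 2) for x in best])
```

In the real system the Planner is an LLM reasoning about chemistry and the Analyst writes interpretations back to it; here both are reduced to a few lines so the feedback structure stays visible.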

I will do a separate Deep Dive on the paper’s results. For now, I will keep the focus on the research process itself.

2.3.2 “Sparks”: Multi-Agent Artificial Intelligence Model Discovers Protein Design Principles

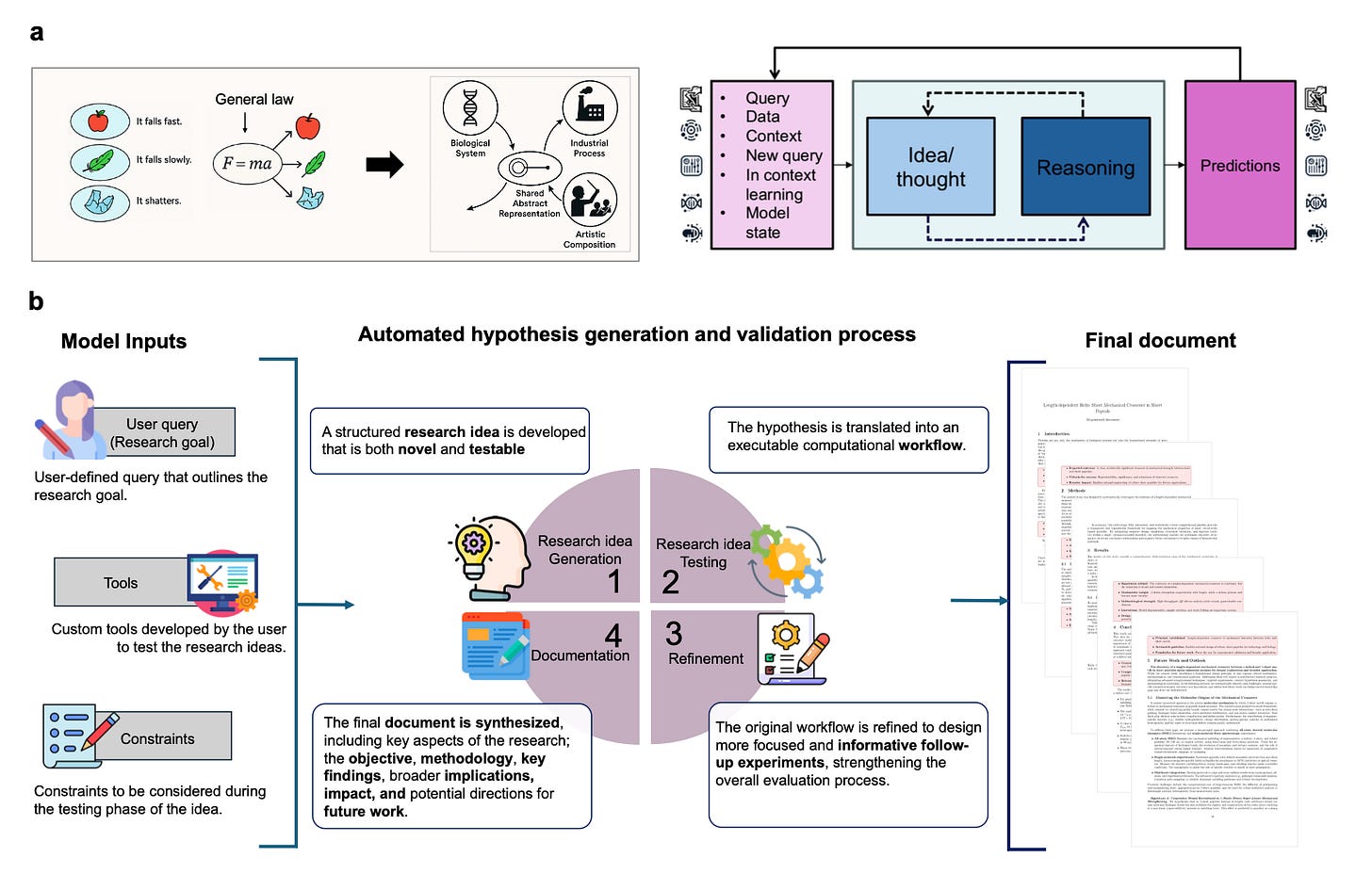

While most AI systems merely resurface existing training data, Sparks enables true autonomous discovery by executing an entire research cycle—from hypothesis to final report—without human help. In protein science, Sparks provided the critical spark of insight needed to uncover previously unknown mechanical crossovers and stability maps, proving that multi-agent models can independently identify new scientific principles.

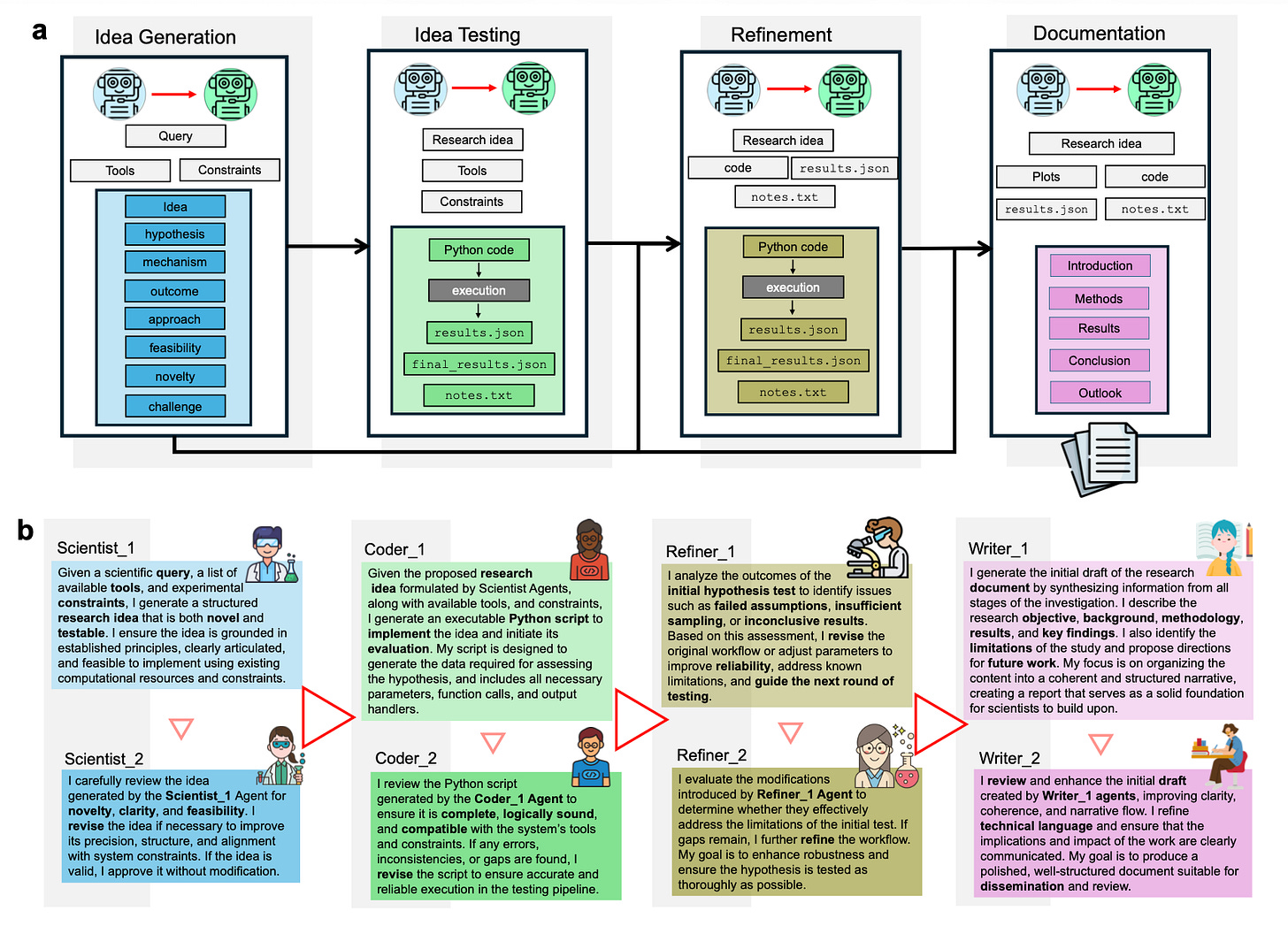

Overview of Sparks, a multi-agent AI model for automated scientific discovery. Panel a: Contemporary AI systems excel at statistical generalization within known domains, but rarely generate or validate hypotheses that extend beyond prior data, and cannot typically identify shared principles across distinct phenomena. This is because powerful models tend to memorize physics without discovering shared concepts. For scientific discovery, however, the elucidation of more general and shared foundational concepts (such as a scaling law, design principle, or crossover) is critical, in order to create significantly higher extrapolation capacity. Panel b: Sparks automates the end-to-end scientific process through four interconnected modules: 1) hypothesis generation, 2) testing, 3) refinement, and 4) documentation. The system begins with a user-defined query, which includes research goals, tools to test the hypothesis, and experimental constraints to guide the experimentation. It then formulates an innovative research idea with a testable hypothesis, followed by rigorous experimentation and refinement cycles. All findings are synthesized into a final document that captures the research objective, methodology, results, and directions for future work, in addition to a shared principle (such as in the examples presented here a scaling law or mechanistic rule). Each module is operated by specialized AI agents with clearly defined, synergistic roles.

Buehler’s idea generation process works as follows (figure panel b):

Overview of the AI Agents and their role implemented in Sparks. Each module operates through a structured yet adaptive sequence of agent interactions, enabling consistency and context-aware responses across the research workflow. Each agent dynamically adapts to previous content in real time. Inter-modular agents facilitate a generation–reflection strategy, using dynamic prompts to process evolving inputs and coordinate outputs, ensuring the system adapts fluidly to new insights throughout the research process.
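The four Sparks modules (hypothesis generation, testing, refinement, documentation) can be illustrated with a toy Python pipeline in which the “shared principle” is a power-law scaling exponent, one of the kinds of result the caption mentions. Everything here is hypothetical: the data are synthetic, the hypothesis is hard-coded rather than generated by an LLM agent, and the “test” is an ordinary least-squares fit in log-log space. It shows the shape of the cycle, not the Sparks implementation.

```python
import math


def generate_hypothesis():
    # Module 1 (stand-in for an LLM agent): propose a testable claim.
    return {"claim": "y scales as a power law of x", "exponent_guess": 1.0}


def run_experiment(xs):
    # Module 2 (stand-in for a simulation tool): synthetic data, y = 2 * x^1.5.
    return [2.0 * x ** 1.5 for x in xs]


def fit_exponent(xs, ys):
    # Least-squares slope of log(y) versus log(x) is the scaling exponent.
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    mx, my = sum(lx) / len(lx), sum(ly) / len(ly)
    num = sum((a - mx) * (b - my) for a, b in zip(lx, ly))
    den = sum((a - mx) ** 2 for a in lx)
    return num / den


def refine(hypothesis, fitted, tol=0.05):
    # Module 3: record whether the data confirm the guess, and update the exponent.
    refined = dict(hypothesis)
    refined["confirmed_as_stated"] = abs(fitted - hypothesis["exponent_guess"]) < tol
    refined["exponent"] = round(fitted, 3)
    return refined


def document(h):
    # Module 4: write up the shared principle.
    return f"Finding: {h['claim']} with exponent {h['exponent']}"


xs = [1, 2, 4, 8, 16, 32]
h = generate_hypothesis()
h = refine(h, fit_exponent(xs, run_experiment(xs)))
print(document(h))
print(h["confirmed_as_stated"])
```

The initial guess (exponent 1.0) is rejected by the data and replaced by the fitted value, which is the generation–reflection pattern in its simplest possible form.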

Now that we know the theory, we can move on to industrialisation.

2.4 Corporate Implementation: The Industrial Response

The industrial software giants have not sat idly by. They are aggressively integrating these academic studies into their commercial platforms.

2.4.1 Dassault Systèmes: BIOVIA and the “Scientific AI”

Dassault’s response to the agentic thesis is centered on its BIOVIA brand, specifically the Generative Therapeutics Design (GTD) solution.

The V+R Loop: GTD operationalizes the agentic loop by connecting the “Virtual” (in silico) with the “Real” (wet lab). Virtual agents screen billions of compounds using machine learning models trained on proprietary data. The most promising candidates are sent to robotic labs for synthesis. The experimental data is then fed back into the system, retraining the models in an automated “Active Learning” cycle.

Scientific AI: Dassault distinguishes its offering as “Scientific AI.” Unlike open-source models trained on the internet, Dassault’s agents are trained on curated, scientifically validated datasets managed within the 3DEXPERIENCE platform. This ensures that the agents do not “hallucinate” chemical structures that violate valency rules or thermodynamic laws.
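Structurally, the V+R loop is an active-learning cycle. The Python sketch below illustrates that cycle under loud assumptions: the “virtual” model is a 1-nearest-neighbour predictor, the “real” lab is a hidden hypothetical function, and compounds are reduced to single numbers. This is not how BIOVIA GTD is implemented; it only shows how wet-lab results flow back into the next round of in-silico screening.

```python
import random


def lab_measure(x):
    """Hypothetical 'Real' step: true activity, unknown to the model."""
    return -(x - 7.3) ** 2


def predict(x, labelled):
    """'Virtual' step: 1-nearest-neighbour prediction from measured compounds."""
    nearest = min(labelled, key=lambda pt: abs(pt - x))
    return labelled[nearest]


rng = random.Random(42)
library = [rng.uniform(0, 10) for _ in range(5000)]   # hypothetical virtual library
labelled = {x: lab_measure(x) for x in library[:3]}   # initial wet-lab data

for cycle in range(5):
    candidates = [x for x in library if x not in labelled]
    # Screen the whole library in silico; send only the top 5 to the "lab".
    top = sorted(candidates, key=lambda x: predict(x, labelled), reverse=True)[:5]
    for x in top:
        labelled[x] = lab_measure(x)   # experimental data fed back into the model

best = max(labelled, key=labelled.get)
print(len(labelled), round(best, 2))
```

Out of 5,000 virtual candidates, only 25 ever reach the (expensive) lab; the model improves because each batch of measurements becomes training data for the next screen.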

2.4.2 Synopsys: SimAI and the Physics of Systems

Synopsys, through Ansys, approaches agentic AI from the perspective of continuum physics and aims to solve the hallucination problem with a physics-informed, three-step approach:

Physics-Locked Generation: Ansys solvers act as “real-time guardians.” Every AI design proposal is instantly checked for physical viability (thermal, mechanical). If it violates physics, it is rejected automatically.

Deterministic Verification: The “guesswork” of AI is replaced by Ansys simulation engines. While AI suggests patterns, the solvers compute electrical behavior and heat dissipation from the governing physical equations.

Omniverse Integration: Through a partnership with NVIDIA Omniverse, designs are validated in a physically accurate virtual environment to check that the digital model matches real-world behavior as closely as possible.
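A minimal sketch of “physics-locked” filtering, assuming the physics check is the one-line steady-state thermal model T_junction = T_ambient + P × R_th (dissipated power times junction-to-ambient thermal resistance). Real Ansys solvers do vastly more than this; the class and function names, the 35 °C ambient, the 105 °C limit, and the design values are hypothetical and only illustrate the deterministic accept/reject gate between an AI proposal and a calculation.

```python
from dataclasses import dataclass


@dataclass
class DesignProposal:
    name: str
    power_w: float        # dissipated power in W, proposed by a generative model
    r_th_c_per_w: float   # junction-to-ambient thermal resistance in °C/W


def junction_temp(p: DesignProposal, ambient_c: float = 35.0) -> float:
    """Deterministic steady-state estimate: T_j = T_amb + P * R_th."""
    return ambient_c + p.power_w * p.r_th_c_per_w


def physics_lock(proposals, t_max_c: float = 105.0):
    """Keep only proposals whose junction temperature stays within the limit."""
    return [p for p in proposals if junction_temp(p) <= t_max_c]


proposals = [
    DesignProposal("A", power_w=300, r_th_c_per_w=0.15),  # about 80 °C
    DesignProposal("B", power_w=700, r_th_c_per_w=0.12),  # about 119 °C
    DesignProposal("C", power_w=500, r_th_c_per_w=0.10),  # about 85 °C
]
print([p.name for p in physics_lock(proposals)])
```

Design B may look attractive to a generative model (more power, more performance), but the deterministic check rejects it automatically, which is the “real-time guardian” role described above.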

Note: Synopsys is part of NVIDIA’s broader ecosystem. My podcast covers this very important aspect in detail.

Part III: SaaSmageddon and the Death of the Seat

3.1 The Economic Crisis of AI Automation

The rise of Agentic AI has triggered a crisis in software monetization, which industry observers have termed “SaaSmageddon”. The traditional “per-seat” licensing model—where a human user pays a fixed fee for access—is fundamentally incompatible with AI workflows.

The “User” Paradox: In an agentic workflow, the “user” is not a human; it is a script. A single engineer might deploy a swarm of 50 agents to explore a design space. If the software is licensed per seat, the customer would need 50 licenses, which is cost-prohibitive. If the vendor allows the agents to run on a single seat, the vendor provides infinite value (massive compute/results) for a fixed price, destroying their own margins.

Infrastructure Costs: Agentic AI requires massive computational resources (GPU inference, high-fidelity validation). Vendors cannot bundle these variable costs into a flat subscription fee.
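The paradox is easiest to see with numbers. The Python sketch below compares the two pricing logics for the 50-agent swarm described above. The seat price, credit price, run hours, and burn rate are purely hypothetical placeholders, not vendor list prices.

```python
SEAT_PRICE_PER_YEAR = 30_000   # hypothetical annual per-seat fee (USD)
PRICE_PER_CREDIT = 1.0         # hypothetical price per consumption credit (USD)


def seat_cost(agents: int) -> float:
    """Per-seat model: every agent counts as a licensed 'user'."""
    return agents * SEAT_PRICE_PER_YEAR


def consumption_cost(agents: int, hours_per_agent: float, credits_per_hour: float) -> float:
    """Consumption model: pay only for the compute the agents actually burn."""
    return agents * hours_per_agent * credits_per_hour * PRICE_PER_CREDIT


agents = 50
print(seat_cost(agents))                  # customer view: 50 seats, cost-prohibitive
print(seat_cost(1))                       # vendor view: 50 agents on 1 seat
print(consumption_cost(agents, 200, 28))  # 50 agents x 200 h x 28 credits/h
```

Under these assumptions, 50 seats cost about five times the consumption bill, while a single shared seat lets 50 agents run for the price of one. Consumption pricing sits between the two and scales with the value actually delivered.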

3.2 The Rise of Consumption Models (Tokens and Credits)

To survive SaaSmageddon, both Synopsys and Dassault have aggressively pivoted to consumption-based models. These models decouple “access” from “value,” charging customers based on the intensity of their compute usage.

3.2.1 Ansys Elastic Licensing (AEC/AEU)

Synopsys has adopted and expanded the Ansys Elastic Licensing framework, which utilizes Ansys Elastic Currencies (AEC) and Ansys Elastic Units (AEU). This model is arguably the most “agent-ready” in the industry.

Granular Consumption: Different solvers consume credits at different rates. For instance, running the Ansys Fluent CFD solver consumes approximately 28 AEC/hour. Running Ansys Mechanical consumes 24 AEC/hour, according to Ansys credit management.

HPC Scaling: The model explicitly monetizes parallelization. If an agent spins up a job on 100 cores to get a faster result, the credit consumption scales according to a non-linear formula (e.g., int(7*(n-4)^0.57)), capturing the value of speed.

Hardware/Software Bundling: A critical innovation is that AECs can cover both the software license and the cloud hardware cost (managed via Azure/AWS). This removes the friction of procuring separate cloud infrastructure for AI agents.
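The scaling formula quoted above can be written as a small Python function. Two assumptions, flagged here and in the code: the formula is taken exactly as given in the text, int(7*(n-4)^0.57), and jobs on 4 cores or fewer are assumed to add no HPC charge, since (n-4) would otherwise be zero or negative. The current Ansys licensing documentation remains the authoritative source for the actual rule.

```python
def hpc_credits(cores: int) -> int:
    """HPC credit term per the article's example formula int(7*(n-4)^0.57).

    Assumption: the first 4 cores carry no HPC charge.
    """
    if cores <= 4:
        return 0
    return int(7 * (cores - 4) ** 0.57)


for n in (4, 5, 8, 36, 100):
    print(n, hpc_credits(n))
```

Because the exponent 0.57 is below 1, the charge grows sub-linearly: going from 8 to 100 cores multiplies the core count by 12.5 but the HPC term only by roughly six. Speed is monetized, but parallelism gets cheaper per core.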

3.2.2 Dassault Systèmes: The Unified Licensing Model (ULM)

Dassault’s response is the Unified Licensing Model (ULM), centered on the 3DEXPERIENCE platform. This model uses a dual-currency system: Tokens and Credits (SimUnits).

Tokens (Concurrent Access): Tokens are used to “check out” a license for a specific tool. For example, a user might need 25 tokens to open the Abaqus solver. When the job is done, the tokens are returned to the pool for another user (or agent) to use. This facilitates flexible, concurrent usage.

Credits (Consumable Compute): SimUnits (Credits) are “burned” for cloud computing. Similar to Ansys, the burn rate depends on the complexity of the solver and the number of cores used.

Friction Points: Unlike Ansys’s purely elastic model, Dassault’s ULM is still heavily tied to “Roles.” A user (or agent) must essentially “be” a specific persona (e.g., “Mechanical Analyst”) to access the tokens. This legacy identity requirement creates friction for fully autonomous, headless agents compared to Ansys’s utility-style model.
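Token-based concurrent licensing behaves like a shared pool with check-out and return, while credits behave like a prepaid balance. The Python sketch below models both under stated assumptions: the 25-token cost for Abaqus mirrors the example above, but the class name `TokenPool`, its methods, the pool size, and the linear per-core-hour burn rule are hypothetical and not part of any Dassault API.

```python
class TokenPool:
    """Toy model: concurrent tokens (returned after use) plus credits (burned)."""

    def __init__(self, tokens: int, credits: float):
        self.free_tokens = tokens
        self.credits = credits
        self.checked_out = {}   # holder -> tokens currently in use

    def check_out(self, holder: str, tokens: int) -> bool:
        if tokens > self.free_tokens:
            return False        # pool exhausted: the user or agent must wait
        self.free_tokens -= tokens
        self.checked_out[holder] = self.checked_out.get(holder, 0) + tokens
        return True

    def release(self, holder: str) -> None:
        """Return a holder's tokens to the pool when the job is done."""
        self.free_tokens += self.checked_out.pop(holder, 0)

    def burn(self, cores: int, hours: float, rate_per_core_hour: float) -> None:
        """Consume credits for cloud compute (hypothetical linear burn rule)."""
        cost = cores * hours * rate_per_core_hour
        if cost > self.credits:
            raise ValueError("insufficient credits")
        self.credits -= cost


pool = TokenPool(tokens=50, credits=1000)
print(pool.check_out("agent-1", 25))   # opens Abaqus
print(pool.check_out("agent-2", 25))
print(pool.check_out("agent-3", 25))   # blocked: no free tokens
pool.release("agent-1")
print(pool.check_out("agent-3", 25))   # succeeds after agent-1 returns its tokens
pool.burn(cores=16, hours=2, rate_per_core_hour=1.5)
print(pool.free_tokens, pool.credits)
```

Tokens cap how many agents can work at the same time; credits cap how much compute they can burn in total. The role requirement described above would sit on top of this as an additional identity check.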

3.3 Financial Implications of the Shift

The transition to these models is creating significant noise in financial reporting, complicating year-over-year comparisons.

Revenue Recognition: Under seat-based models (especially term licenses), license revenue is often recognized upfront under ASC 606 (or its IFRS counterpart, IFRS 15, which applies to Dassault). Under consumption models, revenue is recognized as consumed. This creates a short-term “air pocket” in revenue growth as customers switch from large upfront deals to pay-as-you-go pools.

Dassault’s Struggle: This phenomenon is visible in Dassault’s Q3 2025 results (Press Release), where “Licenses and other software revenue” declined by 13%. However, the company highlighted a massive 36% surge in 3DEXPERIENCE Cloud revenue, confirming that while the legacy model is shrinking, the consumption model is growing rapidly. The playbook for this cloud revenue shift is very similar to SAP’s a few years ago. For years, the market did not reflect that shift in SAP’s stock price, until the stock eventually shot up massively.

Synopsys’s Resilience: Synopsys has navigated this transition more smoothly due to its historical reliance on Time-Based Licenses (TBL) in EDA, which are already ratable. The addition of Ansys’s AEC model provides a seamless mechanism to monetize the incremental compute demand from AI agents without disrupting the core EDA revenue stream.
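The “air pocket” follows from simple arithmetic. The Python sketch below compares a hypothetical three-year contract worth 3 million recognized upfront (term license) with the same value recognized evenly as it is consumed over 36 months. The figures are illustrative assumptions; real contracts mix upfront license revenue with ratable maintenance components.

```python
CONTRACT_VALUE = 3_000_000   # hypothetical total contract value
MONTHS = 36


def upfront_schedule():
    """Term license: license revenue recognized at delivery (month 1)."""
    return [CONTRACT_VALUE] + [0] * (MONTHS - 1)


def ratable_schedule():
    """Consumption/ratable: revenue recognized evenly as it is consumed."""
    return [CONTRACT_VALUE / MONTHS] * MONTHS


def first_year(schedule):
    return sum(schedule[:12])


print(first_year(upfront_schedule()))   # the whole contract lands in year 1
print(first_year(ratable_schedule()))   # only about a third lands in year 1
```

Same customer, same total value, yet reported first-year revenue drops by two thirds. That is why a shrinking license line next to fast-growing cloud revenue can signal a transition rather than a decline.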

Part IV: Financial Performance Analysis in January 2026

4.1 Synopsys: The Financial Superpower

The completion of the Ansys merger has transformed Synopsys into a financial juggernaut with a complex balance sheet to manage.

4.1.1 Post-Merger Financial Structure

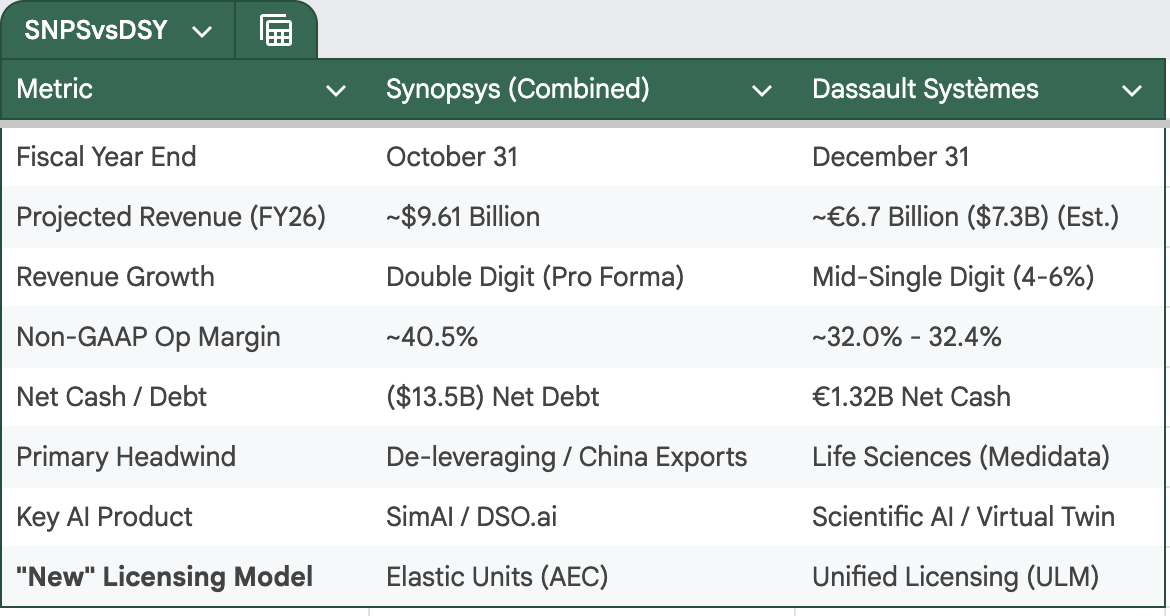

Revenue Outlook: For Fiscal Year 2026, the combined entity has guided for total revenue between $9.56 billion and $9.66 billion. This includes an expected $2.9 billion contribution from Ansys, growing at double-digit rates.

Synergies: The deal is projected to be accretive to non-GAAP EPS in the first full year. Synopsys targets $400 million in run-rate cost synergies by year three and another $400 million in revenue synergies by year four.

Margins: The integration of Ansys’s high-margin business is a tailwind. Synopsys projects FY2026 non-GAAP operating margins to expand to 40.5%, a significant leap from the ~35% range of the standalone EDA business.

4.1.2 The Debt Challenge

The $35 billion acquisition was funded through a mix of cash and stock, leaving Synopsys with a substantial debt load.

Total Debt: As of the close of FY2025, total debt stood at approximately $13.5 billion.

De-leveraging: Management has prioritized rapid de-leveraging. The company repaid $850 million in Q4 2025 and $900 million in November 2025. It plans to prepay the remaining $2.55 billion of its term loans in the first half of 2026. This aggressive repayment schedule is supported by strong free cash flow, projected at $1.9 billion for FY2026.

4.2 Dassault Systèmes: Resilience Amidst Transition

Dassault Systèmes enters 2026 with a solid but slower-growth profile, weighed down by specific sector weakness but buoyed by its industrial core.

4.2.1 2025 Performance Review

Revenue: For the first nine months of 2025, Dassault reported revenue of €4.56 billion, up 5% in constant currencies.

Guidance Adjustment: The company lowered its full-year 2025 revenue growth outlook to 4-6% (down from 6-8%), reflecting a challenging macro environment and specific sector headwinds.

Earnings: Despite the top-line adjustment, profitability remains elite. Non-IFRS operating margin reached 30.1% YTD, and the company reaffirmed its EPS growth target of 7-10% for the full year (€1.31 - €1.35).

4.2.2 The Life Sciences Headwind

The primary drag on Dassault’s performance has been the Life Sciences segment.

Medidata Weakness: Revenue in Life Sciences fell 1% YTD and 3% in Q3 2025. This is attributed to a contraction in clinical trial study starts, a “post-COVID hangover” affecting the biotech sector.

Contrast with Industrial: In stark contrast, the Industrial Innovation segment (Manufacturing) grew 8% YTD, proving the resilience of the core engineering business even in a soft economy.

4.2.3 Balance Sheet Strength

Dassault maintains a fortress balance sheet.

Net Cash Position: As of September 30, 2025, the company held a net cash position of €1.32 billion.

Capital Allocation: This liquidity allows Dassault to continue strategic M&A (such as the €189 million acquisition of ContentServ) and share buybacks (€337 million YTD) without straining its finances.

Part V: Strategic Divergence and the 2026 Outlook

5.1 Synopsys: The “Machine of the Future”

Synopsys’s strategy is clear: Vertical Domination. By combining EDA with Ansys’s physics simulation, it creates a closed loop for the design of intelligent systems.

3DIC & Chiplets: The immediate battlefield is multi-die systems. The integration of Synopsys 3DIC Compiler with Ansys RedHawk-SC allows for the co-design of chip architecture and packaging thermal management, a critical requirement for AI accelerators (like NVIDIA’s Rubin architecture).

Digital Twins: Synopsys now offers the “Digital Twin of the Silicon” and the “Digital Twin of the Physics.” This combined offering is indispensable for automotive and aerospace clients who need to validate software-defined vehicles in a virtual environment before cutting metal.

5.2 Dassault Systèmes: The “World of the Future”

Dassault’s strategy is Horizontal Expansion. The “3D UNIV+RSES” strategy is an attempt to escape the “engineering software” box and become a platform for the entire “Generative Economy.”

IP Lifecycle Management (IPLM): Dassault is rebranding PLM as IPLM. The argument is that in an AI world, the value isn’t the CAD file; it’s the knowledge encoded in the file. The 3DEXPERIENCE platform claims to secure and manage this knowledge better than any competitor.

Sovereignty as a Moat: A critical differentiator is 3DS OUTSCALE, Dassault’s sovereign cloud. As AI regulation (like the EU AI Act) tightens, Dassault positions itself as the only major vendor offering a “Trusted,” sovereign environment for industrial AI, explicitly contrasting itself with US-based hyperscalers.

5.3 Side Note - The Yellow Page Scandal: Why Dassault Systèmes is Not the Scapegoat

Every Sunday evening, I watch the latest episode of Sicherheit & Verteidigung, a German YouTube channel about security and defence. I have been interested in this topic for some years. Long story short: a report a few months ago about the failure of the F126 frigates immediately caught my attention, because it presented Dassault Systèmes as the guilty party.

What happened: The ongoing crisis in German naval shipbuilding, specifically the failures of the F125 and F126 frigate programs, offers a critical case study for the limits of technology in the face of organizational chaos. While the prevailing narrative blames “software errors” and an inability to master Dassault Systèmes’ 3DExperience platform, some analysts argue that the software is merely a messenger delivering bad news: the organizational processes feeding it are broken.

This distinction is vital for understanding the future of complex industrial design. If a rigid, rule-based environment like Dassault’s cannot prevent failure due to poor data governance, the notion that unstructured “AI agents” can simply handle such complexity is a dangerous fallacy.

5.3.1 The “Software Bug” as a Symptom of Data Anarchy

The central failure mechanism in both frigate programs was not the code, but the integrity of the data provided to the code.

The F125 Weight Disaster: The Baden-Württemberg class suffered a humiliating 1.3-degree list and overweight issues. This was widely attributed to a “calculation error” in the software. In reality, the software correctly calculated the weight based on the data it was given. The error stemmed from a “data silo” problem where two different shipyards (TKMS and Lürssen) used unsynchronized databases, and engineers frequently assigned “generic” material properties (with incorrect densities) to components in the CAD model to save time. The software obeyed the laws of physics; the organization did not obey the laws of data discipline.

The F126 Interface Lead Balloon: The current 40-month delay on the F126 project is officially blamed on “IT interface issues” between the Dutch designer (Damen) and the German builder (NVL). The claim is that Dassault’s software cannot “talk” to NVL’s legacy systems. This is an Architectural Governance Failure, not a software bug. The project leaders signed a multi-billion Euro contract without defining a Common Data Environment (CDE) or valid exchange standards. They attempted to integrate a modern Model-Based Systems Engineering (MBSE) suite with legacy production tools without a translation layer, effectively trying to fit a square digital peg into a round analog hole.
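The F125 weight mechanism described above (correct arithmetic on wrong inputs) can be illustrated with a toy mass roll-up. The component list and volumes are hypothetical, not F125 data, and the assumption that a “generic” placeholder material defaults to steel density is labelled in the code; the steel and aluminium densities are approximate textbook values. The point is that a roll-up summing volume × density faithfully propagates a lazily assigned material, and that a simple data-stewardship check can flag it before it reaches the ship.

```python
# Approximate densities in kg/m^3. "generic" is a hypothetical placeholder
# that (by assumption) silently defaults to steel density.
DENSITY = {"steel": 7850, "aluminium": 2700, "generic": 7850}

components = [  # hypothetical components: (name, volume in m^3, material)
    ("hull plate", 12.0, "steel"),
    ("mast", 1.5, "aluminium"),
    ("deckhouse panel", 4.0, "generic"),  # should have been aluminium
]


def total_mass(parts):
    """The 'software' step: mass = sum(volume * density). It never guesses."""
    return sum(vol * DENSITY[mat] for _, vol, mat in parts)


def audit(parts):
    """Data-stewardship check: flag placeholder materials before the roll-up."""
    return [name for name, _, mat in parts if mat == "generic"]


print(audit(components))
error_kg = 4.0 * (DENSITY["generic"] - DENSITY["aluminium"])
print(total_mass(components), error_kg)
```

The calculation itself is correct; the roughly 20 t overweight comes entirely from one mislabelled input. No amount of smarter software fixes that without the governance step represented here by `audit`.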

5.3.2 The Control Group: Proof of Viability

The definitive proof that the software is not the problem lies in the success of Germany’s competitors using the exact same tools.

Naval Group (France): This direct competitor has standardized its entire operation on the Dassault 3DExperience platform. It successfully delivers FDI frigates and Barracuda-class submarines with these tools because it enforces a “Single Source of Truth” within a vertically integrated supply chain.

The Variable is Governance: The software works when the organization is disciplined. It fails in Germany because of a fragmented consortium structure that lacks a unified data standard and attempts to “Gold-Plate” requirements with custom code rather than adapting to the tool’s standard configuration.

5.3.3 Conclusion: The AI Illusion and the Necessity of “The Controlled Environment”

The German frigate crisis serves as a stark warning for the “AI era.” The allure of Generative AI and autonomous agents rests on the promise that technology can bridge gaps in human processes, and that an AI can simply “figure out” the mess.

The reality of the F125 and F126 projects proves the opposite: Complexity requires control.

Garbage In, Garbage Out: If human engineers cannot maintain a clean database of material densities, an AI model trained on that data will not correct the error. It will simply design a listing ship faster. The “Phantom Teachers” database error referenced in the analysis demonstrates that without skilled “data stewards,” complex systems degrade into incoherence.

The Value of Rigidity: Dassault Systèmes offers a controlled environment, a rigorous MBSE framework that forces users to define relationships, interfaces, and physical properties accurately. The German programs failed because the organizations involved fought against this rigidity and tried to bypass the discipline the tool required.

Beyond “Just AI”: AI agents thrive on pattern recognition, but industrial engineering relies on physics and hard constraints. You cannot “prompt” a hull to be watertight if the underlying CAD geometry is non-manifold due to a data migration error.
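The watertightness point can be made precise. A common first-pass test on a triangle mesh is that every edge must be shared by exactly two faces. An edge used once marks a hole, and an edge used three or more times marks a non-manifold junction. The Python sketch below applies that test to a toy tetrahedron with integer vertex indices. It is not a CAD kernel API. Production tools also check face orientation, self-intersections and vertex-level manifoldness, and this sketch does none of that.

```python
from collections import Counter

def edge_counts(triangles: list[tuple[int, int, int]]) -> Counter:
    """Count how many faces use each undirected edge."""
    counts: Counter = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            counts[frozenset((u, v))] += 1
    return counts

def defective_edges(triangles):
    """Edges not shared by exactly two faces (holes or non-manifold junctions)."""
    return sorted(tuple(sorted(e)) for e, n in edge_counts(triangles).items() if n != 2)

def is_watertight(triangles) -> bool:
    # Necessary (not sufficient) condition for a closed, edge-manifold surface.
    return bool(triangles) and not defective_edges(triangles)

# A tetrahedron: four triangular faces over vertices 0..3.
tetra = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
print(is_watertight(tetra))        # True
broken = tetra[:-1]                # a "migration" that silently drops one face
print(is_watertight(broken))       # False
print(defective_edges(broken))     # [(0, 2), (0, 3), (2, 3)]
```

No amount of prompting changes the output of `defective_edges`. The geometry is either closed or it is not. That is the sense in which hard constraints sit outside the reach of pattern recognition.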

The software did not fail; it accurately reflected a broken process. Future success in complex engineering lies not in deploying “smarter” AI to mask these gaps, but in embracing the structure of platforms like Dassault Systèmes that force organizations to be honest with their data.

5.4 Comparative Financial Outlook Table

The following table summarizes the financial standing of both companies as of the start of 2026.

Source: Company Financial Reports

5.5 Conclusion: The Agentic Winner?

As we move deeper into 2026, Synopsys appears to have the tactical advantage. Its “elastic” licensing model is better suited to the immediate needs of Agentic AI, and its portfolio addresses the most urgent hardware challenges (3DIC/AI chips). With Ansys, it has also acquired the physics engine required to validate agentic designs, and the integration is now under way.
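The licensing argument is, at heart, arithmetic. Seat licenses have to cover peak concurrency, while consumption (“elastic”) licenses bill only what actually runs. The Python sketch below uses invented prices and an invented agent workload to show the mechanics: a steady baseline of jobs plus short bursts of massively parallel simulations. The figures do not reflect actual Ansys Elastic or Synopsys rate tables.

```python
HOURS_PER_YEAR = 8760

def seat_cost(peak_jobs: int, price_per_seat_year: float) -> float:
    # Seats must cover the highest number of simultaneous jobs.
    return peak_jobs * price_per_seat_year

def elastic_cost(job_hours: float, price_per_job_hour: float) -> float:
    # Consumption licensing bills only the job-hours actually used.
    return job_hours * price_per_job_hour

def break_even_utilization(price_per_seat_year: float, price_per_job_hour: float) -> float:
    # Average seat utilization above which buying seats becomes cheaper.
    return price_per_seat_year / (price_per_job_hour * HOURS_PER_YEAR)

# Hypothetical agentic workload (all numbers invented for illustration):
baseline_jobs = 2                     # jobs running around the clock
burst_jobs = 48                       # extra parallel jobs during a burst
burst_hours = 4 * 3 * 24              # four 3-day bursts per year
peak = baseline_jobs + burst_jobs     # concurrent jobs at peak
job_hours = baseline_jobs * HOURS_PER_YEAR + burst_jobs * burst_hours

SEAT_PRICE = 20_000                   # hypothetical price per seat-year
HOURLY_PRICE = 10                     # hypothetical price per job-hour

utilization = job_hours / (peak * HOURS_PER_YEAR)
print(f"seats:   {seat_cost(peak, SEAT_PRICE):>12,.0f}")
print(f"elastic: {elastic_cost(job_hours, HOURLY_PRICE):>12,.0f}")
print(f"utilization {utilization:.1%} vs break-even "
      f"{break_even_utilization(SEAT_PRICE, HOURLY_PRICE):.1%}")
```

The break-even line is the useful part. Elastic pricing wins whenever average seat utilization stays below the ratio of the seat price to a full year of hourly billing. That is the profile one would expect from bursty, agent-driven workloads. With different prices, the conclusion can flip.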

Dassault Systèmes, however, plays a longer game. If the “Agentic Materials Science” thesis holds true, namely that biology and chemistry will drive the next wave of industrial innovation, Dassault’s BIOVIA division and its “Scientific AI” approach may eventually eclipse the electronics market in value. But for now, the company must navigate a painful business-model transition while waiting for the life sciences market to recover. In my view, this is exactly the time to accumulate shares.

The time of the human “user” is ending. The age of the “agents” has arrived. The spoils will go to the platform that can feed these agents the data, compute, and physics they need to build the new world.

Personal Note: I own both stocks and will buy more if share prices drop further, so I am positively biased here. I hold Synopsys in a broader AI semiconductor basket and Dassault Systèmes in a broader life sciences basket, and each stock plays a specific role in its portfolio.

Hope you enjoyed it ❤️

Important Disclaimer

The content published on this Substack, including all articles, analyses, opinions, predictions, recommendations, and comments, represents solely the personal views and opinions of the author. It is provided for general informational and educational purposes only and does not constitute professional investment advice, financial advice, trading advice, tax advice, legal advice, or any other form of regulated advice. Nothing herein should be construed as a recommendation to buy, sell, hold, or invest in any specific asset, security, cryptocurrency, or financial instrument. The author is not a registered investment advisor, broker-dealer, or financial professional, and no fiduciary relationship is created or implied. Investing in financial markets, including cryptocurrencies and digital assets, involves substantial risk, including the potential for complete loss of principal. Past performance is not indicative of future results. All investments carry risk, and readers should carefully consider their own financial situation, risk tolerance, and objectives before making any investment decisions. Readers are strongly encouraged to conduct their own independent research (DYOR – Do Your Own Research) and consult with qualified professional advisors before acting on any information provided here. The author and this publication assume no responsibility or liability whatsoever for any errors, omissions, or inaccuracies in the content, or for any actions taken in reliance thereon, including any direct, indirect, incidental, or consequential losses or damages. By reading this Substack, you agree to bear full responsibility for your own investment decisions and outcomes.

Additional Sources:

Original source: 0000883241-25-000028

Original source: 466c99bb-d30d-4265-bdaf-c16c1ada58a6

https://news.synopsys.com/2025-07-17-Synopsys-Completes-Acquisition-of-Ansys

https://dspace.mit.edu/bitstream/handle/1721.1/162585/3701716.3718485.pdf?sequence=1&isAllowed=y

https://www.researchgate.net/scientific-contributions/Markus-J-Buehler-38748236

https://www.semanticscholar.org/paper/81fee2fd4bc007fda9a1b1d81e4de66ded867215

https://www.3ds.com/products/biovia/generative-therapeutics-design

https://altem.com/wp-content/uploads/2022/02/generative-therapeutics-design-datasheet-biovia.pdf

https://medium.com/@medidimervyn/how-ansys-simai-redefines-the-game-97ac13bd0e9b

https://www.techbriefs.com/component/content/article/52765-doc-9591

https://paid.ai/blog/ai-monetization/notes-on-where-seat-based-pricing-is-going

https://www.inas.ro/files/docs/ansys/ansys-elastic-currency-software-consumption-rate-table-6.pdf

https://www.ansys.com/news-center/press-releases/1-16-24-synopsys-acquires-ansys

https://iitmpravartak.org.in/home/pdf/cadfem_Electrification_Advantage_Magazine.pdf

https://www.ansys.com/news-center/press-releases/5-22-24-ansys-stockholders-approve-acquisition